All the Data

There are trillions of data points freely available through public APIs on the internet, covering almost any topic in every possible format. For the curious, this represents a vast source of insight and discovery if you can access and interpret it.

The challenge is that raw data is meaningless without expertise. To use it, you need to code, understand API documentation, and actually know what you might be looking for.

You may think, “I’ll just ask an LLM.” But there’s a catch. Models like Claude or ChatGPT can’t access all the real-time data you want them to. They rely on fixed training sets with cutoff dates, so their answers about recent trends or statistics are often outdated or incomplete.

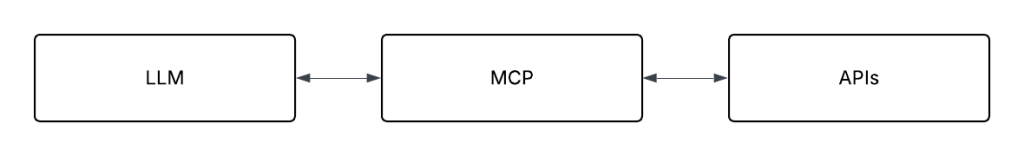

The result is that public data remains out of reach for most people, available to all but usable only by those with technical skills. As represented by this terrible illustration:

The Solution: Model Context Protocol (MCP)

What is the MCP?

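MCP is a standard for how AI assistants communicate with external tools and data sources. These integrations can be incredibly powerful and complex – think smart home interactions, robotics and all sorts of exciting applications.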

For our purposes though, MCP will create a bridge between AI assistants and external data sources.

In the following example, the LLM communicates with the MCP server over STDIO (standard input/output), and the server then fetches data from the API via HTTPS. Simple as that.
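To make that flow a little more concrete: MCP messages are JSON-RPC 2.0, and over STDIO each message travels as a single line of JSON. The sketch below builds the kind of request a client might send to call a tool. The tool name and arguments are assumptions borrowed from the server built later in this post, and the build_tool_call helper is hypothetical.

```python
import json

def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialise a JSON-RPC 2.0 'tools/call' request as one line of JSON."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    # Messages on the stdio transport are newline-delimited, so each one
    # must fit on a single line; json.dumps without indent does that.
    return json.dumps(message)

# Illustrative tool name and arguments (assumed, see the server below)
line = build_tool_call(
    1,
    "get_stop_and_search_data",
    {"year": 2024, "month": 1, "force": "leicestershire"},
)
print(line)
```

In practice the client (Claude Desktop here) and the framework handle this exchange for you, so you rarely need to write these messages by hand.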

Building the MCP Server: A Step-by-Step Guide

We’ll use the APIs provided by the UK Police in our example, concentrating on stop and search data. It’s publicly available, well-structured, and demonstrates the insight concept perfectly.

For this tutorial, we’ll write our MCP server in Python and connect it to Claude Desktop. Claude makes adding MCP servers pretty easy (just edit a config file), and it provides a clean graphical interface for viewing results, making it perfect for exploring and visualising data.

The API

Let’s start by looking at what this specific API request and response look like.

The documentation linked above tells us we need to call the API with the month, year and UK police force whose data we’re interested in:

    GET: https://data.police.uk/api/stops-force?date=2024-01&force=leicestershire

The response gives us a list of reports:

    [
        {
            "age_range": "18-24",
            "officer_defined_ethnicity": null,
            "involved_person": true,
            "self_defined_ethnicity": "Other ethnic group - Not stated",
            "gender": "Male",
            "legislation": null,
            "outcome_linked_to_object_of_search": null,
            "datetime": "2024-01-06T22:45:00+00:00",
            "outcome_object": {
                "id": "bu-no-further-action",
                "name": "A no further action disposal"
            },
            "location": {
                "latitude": "52.628997",
                "street": {
                    "id": 1738518,
                    "name": "On or near Crescent Street"
                },
                "longitude": "-1.130273"
            },
            "object_of_search": "Controlled drugs",
            "operation": null,
            "outcome": "A no further action disposal",
            "type": "Person and Vehicle search",
            "operation_name": null,
            "removal_of_more_than_outer_clothing": false
        },
        ....
    ]

Each record returned contains when and where something happened, demographic information, what was being searched for, and the outcome. Now let’s build a server that makes this data easy to access.
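As a quick sanity check on the request format and the record structure, here’s a small sketch using only the standard library. It builds the request URL from the date and force parameters, then pulls the when, where, what and outcome fields out of one record. The function names are illustrative, and the sample record is a trimmed copy of the response shown earlier.

```python
from urllib.parse import urlencode

BASE_URL = "https://data.police.uk/api/stops-force"

def build_url(year: int, month: int, force: str) -> str:
    """Build the stops-force URL; the date parameter is formatted as YYYY-MM."""
    query = urlencode({"date": f"{year}-{month:02d}", "force": force})
    return f"{BASE_URL}?{query}"

def summarise_record(record: dict) -> dict:
    """Pick out when, where, what and outcome from a single record."""
    # Guard against a missing or null location/street (an assumption,
    # made so the helper never raises on incomplete records)
    location = record.get("location") or {}
    street = location.get("street") or {}
    return {
        "when": record.get("datetime"),
        "where": street.get("name"),
        "searched_for": record.get("object_of_search"),
        "outcome": record.get("outcome"),
    }

# Trimmed copy of the sample record from the response above
sample = {
    "datetime": "2024-01-06T22:45:00+00:00",
    "location": {"street": {"id": 1738518, "name": "On or near Crescent Street"}},
    "object_of_search": "Controlled drugs",
    "outcome": "A no further action disposal",
}

print(build_url(2024, 1, "leicestershire"))
print(summarise_record(sample))
```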

Getting Set Up

First, let’s get our development environment ready. We’ll use uv, a modern Python package manager that’s dramatically faster than pip.

Install uv:

On macOS/Linux:

    curl -LsSf https://astral.sh/uv/install.sh | sh

On Windows:

    powershell -c "irm https://astral.sh/uv/install.ps1 | iex"

Create your project:

    # Create a new directory and initialise the project
    mkdir uk-police-mcp
    cd uk-police-mcp
    uv init

    # Add the dependencies we need
    uv add fastmcp httpx

That’s it! uv will automatically create a virtual environment and install FastMCP (our MCP framework) and httpx (for making HTTP requests to the API).

Create your server file:

Create a file called server.py in your project directory. This is where we’ll write all our code.

About FastMCP – Don’t Reinvent the Wheel

MCP is the protocol (the standard for how AI assistants and data sources communicate). It’s not too complicated to work with but using a framework to help implement it makes things easier. That’s where FastMCP comes in.

It’s a Python library that makes building MCP servers really simple. Created by Jeremiah Lowin (also behind the Marvin AI library), it handles all the protocol complexity: authentication, message formatting, error handling, and communication. This means you can focus on your data logic instead of protocol details.

Why use it?

- Simple API: Define a tool with a single decorator (@mcp.tool())
- Type safety: It uses Python type hints for automatic validation
- Async support: Built for modern async Python
- Minimal boilerplate: You write the data logic and it handles everything else

Step 1: Initialise the Server

First, we need to create our MCP server and give it a name. This sets up the foundation that will handle all communication with the LLM.

    from mcp.server.fastmcp import FastMCP

    mcp = FastMCP("UK Police Stop and Search")

That’s it! FastMCP handles all the communication protocols behind the scenes. (The default is STDIO, i.e. standard input/output channels.)

Step 2: Define the First Tool

In MCP, a “tool” is a function that Claude can call. Think of it like creating a specialised app that the LLM can “install” and use whenever it needs this data. Let’s create our main data fetching tool.

    @mcp.tool()
    async def get_stop_and_search_data(
        year: int,
        month: int,
        force: str = "metropolitan"
    ) -> dict:
        """
        Fetch stop and search data from the UK Police Database.

        Args:
            year: Year (e.g., 2024)
            month: Month (1-12)
            force: Police force name (default: metropolitan)
        """

What’s happening:

- @mcp.tool() registers this function with FastMCP
- The docstring tells the LLM what this tool does and how to use it
- Type hints (int, str, dict) help the LLM understand what parameters it needs to provide
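To get a feel for why the docstring and type hints matter, here’s a toy sketch of how a framework could turn a plain function into a tool description. This is not FastMCP’s actual implementation. The describe_tool helper and the shape of its output are assumptions for illustration only, and it uses just the standard library.

```python
import inspect
from typing import get_type_hints

def describe_tool(func) -> dict:
    """Collect a function's name, docstring and parameter types."""
    hints = get_type_hints(func)
    params = {}
    for name, param in inspect.signature(func).parameters.items():
        params[name] = {
            "type": hints.get(name, str).__name__,
            # Parameters without a default must be supplied by the caller
            "required": param.default is inspect.Parameter.empty,
        }
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": params,
    }

# Stub with the same signature as the tool above (body omitted)
async def get_stop_and_search_data(
    year: int, month: int, force: str = "metropolitan"
) -> dict:
    """Fetch stop and search data from the UK Police Database."""
    return {}

print(describe_tool(get_stop_and_search_data))
```

Everything the sketch extracts comes from the signature and docstring, which is why it pays to write both carefully.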

Step 3: Validate Inputs

Before we call the API, check that the inputs make sense.

    # Needs `from datetime import datetime` at the top of server.py
    if not 1 <= month <= 12:
        return {"error": "Invalid month. Must be between 1 and 12."}

    current_year = datetime.now().year
    if year < 2010 or year > current_year:
        return {"error": f"Invalid year. Must be between 2010 and {current_year}."}

Step 4: Fetch Data from the API

Now we’re ready to actually get the data. We format the date correctly, build the URL, and make the request. Using async/await means this won’t block other operations whilst waiting for the API to respond.
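If “won’t block” feels abstract, this small sketch may help. It uses asyncio.sleep as a stand-in for a slow API call (an assumption, so it runs without network access) and shows two simulated requests overlapping instead of running back to back. The fake_fetch name is made up for the demo.

```python
import asyncio

events = []

async def fake_fetch(label: str, delay: float) -> str:
    """Pretend to call an API: record start, wait, record finish."""
    events.append(f"start {label}")
    await asyncio.sleep(delay)  # control returns to the event loop here
    events.append(f"end {label}")
    return label

async def main() -> list:
    # gather() runs both coroutines concurrently on one event loop
    return await asyncio.gather(
        fake_fetch("2024-01", 0.05),
        fake_fetch("2024-02", 0.10),
    )

results = asyncio.run(main())
print(results)
print(events)
```

Both “start” events are recorded before either “end” event, which is the overlap that await makes possible.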

    # Needs `import httpx` at the top of server.py
    date_str = f"{year}-{month:02d}"
    url = "https://data.police.uk/api/stops-force"
    params = {"date": date_str, "force": force}

    async with httpx.AsyncClient(timeout=30.0) as client:
        response = await client.get(url, params=params)
        response.raise_for_status()
        data = response.json()

Step 5: Process and Enhance the Data

Raw data is overwhelming. If you got thousands of individual records, you’d struggle to make sense of them. Let’s compute useful statistics automatically.
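The idea boils down to counting how often each value appears in a field. As a standalone sketch of that idea, here’s a version using collections.Counter. The tally helper and the tiny sample list are illustrative assumptions, not real API output. One detail worth noting: the API can return null for a field (as in the sample response earlier), and a plain record.get(field, "Unknown") only falls back when the key is missing entirely, so the sketch uses `or` to catch null values too.

```python
from collections import Counter

def tally(records: list, field: str) -> dict:
    """Count each value of `field`, mapping null or missing values to 'Unknown'."""
    return dict(Counter(record.get(field) or "Unknown" for record in records))

# Made-up records, just enough to exercise the helper
sample = [
    {"age_range": "18-24", "gender": "Male", "outcome": "A no further action disposal"},
    {"age_range": None, "gender": "Male", "outcome": "Arrest"},
    {"age_range": "18-24", "gender": "Female", "outcome": "A no further action disposal"},
]

summary = {field: tally(sample, field) for field in ("age_range", "gender", "outcome")}
print(summary)
```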

    total_searches = len(data)

    # Age range breakdown ("or" also catches null values returned by the API)
    age_ranges = {}
    for record in data:
        age = record.get("age_range") or "Unknown"
        age_ranges[age] = age_ranges.get(age, 0) + 1

    # Gender breakdown
    genders = {}
    for record in data:
        gender = record.get("gender") or "Unknown"
        genders[gender] = genders.get(gender, 0) + 1

    # Outcome breakdown
    outcomes = {}
    for record in data:
        outcome = record.get("outcome") or "Unknown"
        outcomes[outcome] = outcomes.get(outcome, 0) + 1

    return {
        "date": date_str,
        "force": force,
        "total_records": total_searches,
        "summary": {
            "age_ranges": age_ranges,
            "genders": genders,
            "outcomes": outcomes
        },
        "records": data
    }

Step 6: Handle Errors Gracefully

APIs fail. Networks drop. Servers have bad days. We’ll wrap everything in error handling so the tool returns helpful messages instead of crashing.
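The pattern is to catch the failure inside the tool and return a small error dictionary the LLM can read. Here’s a dependency-free sketch of that pattern. FakeHTTPStatusError and flaky_fetch are stand-ins invented for the demo (they only mimic an HTTP client raising on a bad status), so treat it as an illustration rather than the real server code.

```python
import asyncio

class FakeHTTPStatusError(Exception):
    """Stand-in for an HTTP client's status error, so the demo needs no dependencies."""
    def __init__(self, status_code: int):
        super().__init__(f"status {status_code}")
        self.status_code = status_code

async def flaky_fetch(status_code: int) -> list:
    """Pretend API call: raises for 4xx/5xx, otherwise returns one record."""
    if status_code >= 400:
        raise FakeHTTPStatusError(status_code)
    return [{"outcome": "Arrest"}]

async def safe_tool(status_code: int) -> dict:
    """Return data on success, or an error dict instead of raising."""
    try:
        data = await flaky_fetch(status_code)
        return {"total_records": len(data)}
    except FakeHTTPStatusError as e:
        return {"error": f"HTTP error: {e.status_code}"}

print(asyncio.run(safe_tool(200)))
print(asyncio.run(safe_tool(404)))
```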

    @mcp.tool()
    async def get_stop_and_search_data(year: int, month: int, force: str = "metropolitan") -> dict:
        """..."""
        # Validation code here

        try:
            # Fetch and process data here
            pass
        except httpx.HTTPStatusError as e:
            return {"error": f"HTTP error: {e.response.status_code}"}
        except httpx.RequestError as e:
            return {"error": "Request failed", "message": str(e)}

Step 7: Add Helper Tools

Let’s create some additional tools to help users discover what’s available and get quick overviews without downloading thousands of records.

    @mcp.tool()
    async def list_available_forces() -> dict:
        """List commonly available police forces in the UK."""
        return {"forces": ["metropolitan", "greater-manchester", "west-midlands", ...]}

    @mcp.tool()
    async def get_search_statistics(year: int, month: int, force: str = "metropolitan") -> dict:
        """Get summary statistics without full records."""
        result = await get_stop_and_search_data(year, month, force)

        # Pass validation or HTTP errors straight through
        if "error" in result:
            return result

        return {
            "date": result["date"],
            "force": result["force"],
            "total_records": result["total_records"],
            "summary": result["summary"]
        }

Step 8: Run the Server

Finally, add the code that actually starts the server. When you run the Python file, it launches and waits for our LLM to connect.

    if __name__ == "__main__":
        mcp.run()

Step 9: Connecting to Claude

To connect your server to Claude Desktop, navigate to Settings > Developer > Edit Config and add this to the JSON file:

    {
        "mcpServers": {
            "uk-police": {
                "command": "uv",
                "args": [
                    "--directory",
                    "/path/to/uk-police-mcp",
                    "run",
                    "server.py"
                ]
            }
        }
    }

Restart Claude and you’re good to go! You can check everything is working by asking Claude “Tell me about your MCP servers” and it should give an overview of its new-found capabilities.
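Since the config needs the absolute path to your project, a tiny script can generate the entry for you to paste in. This is just a convenience sketch: the make_config helper is hypothetical, and it assumes you run it from inside the project directory.

```python
import json
from pathlib import Path

def make_config(project_dir: Path, name: str = "uk-police") -> dict:
    """Build the mcpServers entry shown above for a given project directory."""
    return {
        "mcpServers": {
            name: {
                "command": "uv",
                # resolve() turns a relative path into an absolute one
                "args": ["--directory", str(project_dir.resolve()), "run", "server.py"],
            }
        }
    }

config = make_config(Path.cwd())
print(json.dumps(config, indent=2))
```

If the config file already lists other servers, merge the "uk-police" entry into the existing "mcpServers" object rather than replacing the file.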

Taking it for a whirl

Now for the interesting bit: let’s stretch Claude’s legs by asking it to do some investigative journalism.

Here’s the prompt I went with:

You are an investigative journalist with access to a dataset of UK police stop-and-search records. Your goal is to find clear, factual patterns that could become short newspaper headlines or charts. Looks for data or patterns that might be surprising and not be common knowledge for most readers.

After a bit of thinking and cajoling, Claude (Sonnet 4.5, i.e. the free version) came up with the following report – Link to the full website

Wrapping up

And there you have it – proof of what’s possible when LLMs get real data to explore. I encourage you to build something, anything, and enjoy the journey!

The full source code is here